This article is a chapter from my new book Explain the Cloud Like I'm 10. The first release was written specifically for cloud newbies. I've made some updates and added a few chapters—Netflix: What Happens When You Press Play? and What is Cloud Computing?—that level it up to a couple ticks past beginner. I think even fairly experienced people might get something out of it.

So if you are looking for a good introduction to the cloud or know someone who is, please take a look. I think you'll like it. I'm pretty proud of how it turned out.

I pulled this chapter together from dozens of sources that were at times somewhat contradictory. Facts on the ground change over time and depend on who is telling the story and what audience they're addressing. I tried to create as coherent a narrative as I could. If there are any errors I'd be more than happy to fix them. Keep in mind this article is not a technical deep dive. It's a big-picture article. For example, I don't mention the word microservice even once :-)

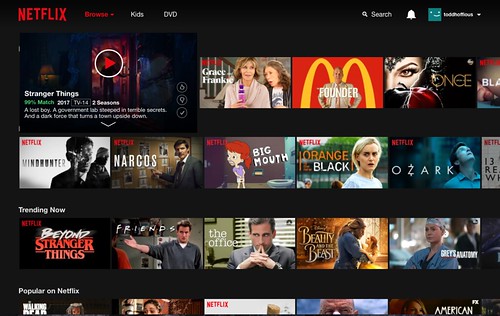

Netflix seems so simple. Press play and video magically appears. Easy, right? Not so much.

Given our discussion in the What is Cloud Computing? chapter, you might expect Netflix to serve video using AWS. Press play in a Netflix application and video stored in S3 would be streamed from S3, over the internet, directly to your device.

A completely sensible approach…for a much smaller service.

But that’s not how Netflix works at all. It’s far more complicated and interesting than you might imagine.

To see why, let’s look at some impressive Netflix statistics for 2017.

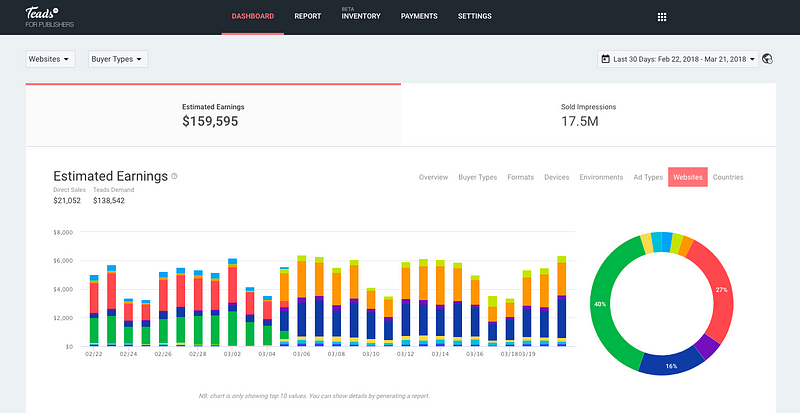

- Netflix has more than 110 million subscribers.

- Netflix operates in more than 200 countries.

- Netflix has nearly $3 billion in revenue per quarter.

- Netflix adds more than 5 million new subscribers per quarter.

- Netflix plays more than 1 billion hours of video each week. As a comparison, YouTube streams 1 billion hours of video every day while Facebook streams 110 million hours of video every day.

- Netflix played 250 million hours of video on a single day in 2017.

- Netflix accounts for over 37% of peak internet traffic in the United States.

- Netflix plans to spend $7 billion on new content in 2018.
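To put the viewing numbers in the list on the same scale, here is a small back-of-the-envelope sketch in Python. It uses only the figures quoted above. The variable names are mine, and the per-day average is simple division that assumes viewing is spread evenly across the week, not a number Netflix has published.

```python
# Figures quoted in the list above (2017). The list says "more than",
# so treat these as rough lower bounds.
netflix_hours_per_week = 1_000_000_000
netflix_peak_day_hours = 250_000_000
youtube_hours_per_day = 1_000_000_000
facebook_hours_per_day = 110_000_000

# Assumption: spread the weekly total evenly over seven days.
netflix_avg_hours_per_day = netflix_hours_per_week / 7

# Compare the record day and the other services with that average day.
peak_to_average = netflix_peak_day_hours / netflix_avg_hours_per_day
youtube_to_netflix = youtube_hours_per_day / netflix_avg_hours_per_day
facebook_to_netflix = facebook_hours_per_day / netflix_avg_hours_per_day

print(f"Netflix average day: {netflix_avg_hours_per_day / 1e6:.0f} million hours")
print(f"Record day vs. average day: {peak_to_average:.2f}x")
print(f"YouTube vs. Netflix average day: {youtube_to_netflix:.1f}x")
print(f"Facebook vs. Netflix average day: {facebook_to_netflix:.2f}x")
```

Under that even-spread assumption, an average Netflix day works out to roughly 143 million hours, so the 250 million hour day was about 1.75 times a typical one.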

What have we learned?

Netflix is huge. They’re global, they have a lot of members, they play a lot of videos, and they have a lot of money.

Another relevant factoid is that Netflix is subscription-based. Members pay Netflix monthly and can cancel at any time. When you press play to chill on Netflix, it had better work. Unhappy members unsubscribe.

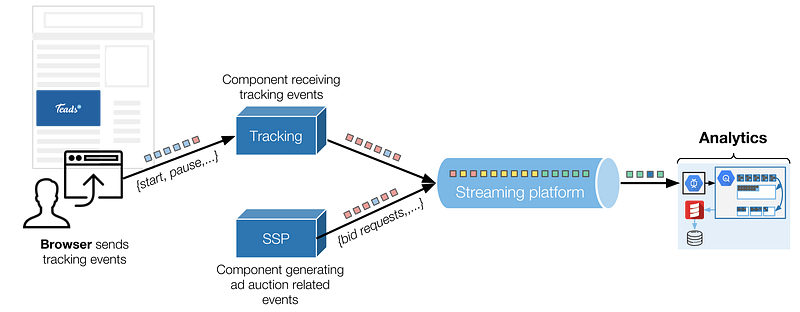

Netflix operates in two clouds: AWS and Open Connect.

How does Netflix keep its members happy? With the cloud, of course. Actually, Netflix uses two different clouds: AWS and Open Connect.

Both clouds must work together seamlessly to deliver endless hours of customer-pleasing video.

The three parts of Netflix: client, backend, CDN.

You can think of Netflix as being divided into three parts: the client, the backend, and the CDN.

The client is the user interface on any device used to browse and play Netflix videos. It could be an app on your iPhone, a website on your desktop computer, or even an app on your Smart TV. Netflix controls each and every client for each and every device.

Everything that happens before you hit play happens in the backend, which runs in AWS. That includes things like preparing all new incoming video and handling requests from all apps, websites, TVs, and other devices.

Everything that happens after you hit play is handled by Open Connect. Open Connect is Netflix’s custom global content delivery network (CDN). When you press play the video is served from Open Connect. Don’t worry; we’ll talk about what this means later.
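The split described above can be written down as a tiny Python sketch. This is only a toy model of the rule "before play goes to the backend, after play goes to Open Connect". The names `Part`, `serving_part`, and the example steps are made up for illustration and are not anything Netflix uses.

```python
from enum import Enum


class Part(Enum):
    """The three parts of Netflix named in this chapter."""
    CLIENT = "client (the app on your device)"
    BACKEND = "backend (runs in AWS)"
    CDN = "CDN (Open Connect)"


def serving_part(play_pressed: bool) -> Part:
    """Return which part the client talks to, per the rule in the text."""
    # Before play: the backend in AWS. After play: Open Connect.
    return Part.CDN if play_pressed else Part.BACKEND


# Hypothetical steps in one viewing session: (what you do, has play been pressed?)
steps = [
    ("browse titles", False),
    ("watch the video", True),
]

for name, play_pressed in steps:
    print(f"{name}: {Part.CLIENT.value} talks to {serving_part(play_pressed).value}")
```

The client appears in every line because it is always the thing in your hand. Only the part it talks to changes once you press play.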

Interestingly, at Netflix they don’t actually say “hit play on a video”; they say “click start on a title.” Every industry has its own lingo.

By controlling all three areas (client, backend, and CDN), Netflix has achieved complete vertical integration.

Netflix controls your video viewing experience from beginning to end. That’s why it just works when you click play from anywhere in the world. You reliably get the content you want to watch when you want to watch it.

Let’s see how Netflix makes that happen.

In 2008 Netflix Started Moving To AWS

Todd Hoff